DeepSeek, a Chinese AI chatbot similar to OpenAI’s ChatGPT, is the most downloaded free app in the U.S. — but its swift rise to the top of the app store charts has raised potent privacy concerns at a time when the U.S. is banning TikTok over its ties to the Chinese government.

Like most apps, DeepSeek requires you to agree to its privacy policy when you sign up to access it, but you probably didn’t read it.

In summary, “DeepSeek’s privacy policy, which can be found in English, makes it clear: user data, including conversations and generated responses, is stored on servers in China,” Adrianus Warmenhoven, a cybersecurity expert at NordVPN, said in a statement. “This raises concerns because of data collection outlined — ranging from user-shared information to data from external sources — which falls under the potential risks associated with storing such data in a jurisdiction with different privacy and security standards.”

Here’s the TL;DR from DeepSeek’s privacy policy:

- It collects “Information You Provide”
  - Profile information like date of birth, username, email address, telephone number, password
  - The text, audio, prompt, feedback, chat history, uploaded files, and other content you provide to DeepSeek
  - Information when you contact them, like proof of identity or age and feedback or inquiries
- It collects “Automatically Collected Information”
  - Internet and other network activity information like your IP address, device identifier, and cookies
  - Technical information like your device model, operating system, keystroke patterns or rhythms, IP address, system language, diagnostic and performance information, and an automatically assigned device ID and user ID
  - Usage information like the features you use
  - Payment information, which is self-explanatory
- It collects “Information from Other Sources”
  - Log-in, sign-up, or linked services and accounts, like if you sign up using Google or Apple
  - Advertising measurement and other partners share user information with DeepSeek, such as what you’ve bought at their stores

What do “keystroke patterns or rhythms” mean?

DeepSeek’s privacy policy states that it collects “keystroke patterns or rhythms,” which might seem unusual but is not unheard of. For instance, TikTok collects the same information, while Instagram does not.

DeepSeek didn’t immediately respond to a request for clarification from Mashable, so much about its keystroke data collection remains unknown, including what DeepSeek will do with the information. For its part, TikTok has said that collecting “keystroke patterns or rhythms” specifically refers to the timing of when keys are pressed, not the specific keys that are pressed. That timing can act as a form of biometric identification that distinguishes one user from another. TikTok told Snopes that this practice is “fundamentally” different from keylogging, a type of monitoring originally developed in the mid-1970s by the Soviet Union that’s still used today by hundreds of popular sites.
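To make the distinction between timing and keylogging concrete, here is a minimal Python sketch of how typing rhythm could be summarized without recording which keys were pressed. It is an illustration only: the `KeyEvent` type, the `timing_profile` function, and the sample numbers are hypothetical, and nothing here reflects how DeepSeek or TikTok actually process keystroke data.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class KeyEvent:
    """One keystroke, described only by timing (hypothetical type)."""
    press_ms: float    # when the key went down
    release_ms: float  # when the key came back up
    # There is deliberately no field for WHICH key was pressed;
    # a keylogger would record that, a timing profile does not.


def timing_profile(events: list[KeyEvent]) -> dict[str, float]:
    """Summarize typing rhythm as average dwell and flight times (ms).

    Assumes at least one event; an empty list raises StatisticsError.
    """
    # Dwell time: how long each key is held down.
    dwell = [e.release_ms - e.press_ms for e in events]
    # Flight time: gap between releasing one key and pressing the next.
    flight = [b.press_ms - a.release_ms for a, b in zip(events, events[1:])]
    return {
        "mean_dwell_ms": mean(dwell),
        "mean_flight_ms": mean(flight) if flight else 0.0,
    }


# Made-up sample: three keystrokes with their press and release times.
sample = [KeyEvent(0.0, 90.0), KeyEvent(150.0, 230.0), KeyEvent(300.0, 400.0)]
print(timing_profile(sample))
```

Two people typing the same sentence would produce identical text but different dwell and flight averages, which is why a rhythm profile can help tell users apart even though it contains no typed content.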

Nicky Watson, the co-founder and chief architect of consent management platform Syrenis, told Mashable that “the biometrics space is growing rapidly, and the accuracy [at which] it can verify identities is unmatched.”

“However, with more companies collecting biometrics, a whole new set of data privacy and security risks need to be addressed, including security breaches, identity theft, impersonation, and fraud. Biometrics’ inability to be revoked or reset, unlike compromised passwords, makes it a much higher-stakes form of identification,” Watson said.

What does DeepSeek do with my data?

It uses all this information for various purposes, like ensuring it sends users relevant advertising and notifying them about changes to its services, which is pretty typical. But it also says it will use the data to “comply with our legal obligations, or as necessary to perform tasks in the public interest, or to protect the vital interests of our users and other people.” Moreover, its “corporate group” can access the data, and DeepSeek can share information with law enforcement agencies.

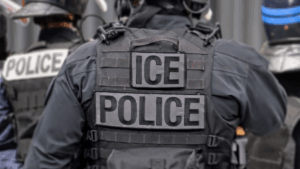

Many companies include that blanket statement in their privacy policies, but DeepSeek’s data is stored “in secure servers located in the People’s Republic of China.” China has some notable legal requirements under its cybersecurity and privacy laws, including laws that demand tech companies cooperate with national intelligence efforts. This, combined with the current TikTok ban, has fueled fears of propaganda and censorship. For example, you can’t ask DeepSeek questions about the 1989 Tiananmen Square massacre.

“DeepSeek’s privacy policy openly states that the wide array of user data they collect goes to servers in China,” Watson said. “This alone raises big questions about how that data could be used beyond just running the app. It’s so easy to get swept up in the hype of a new trending AI tool and accept the terms of use without thinking twice, but this privacy policy should make users stop and ask: Am I giving away private details like my location, browsing activity, or even personal messages without realizing it?”

WIRED reviewed the website’s activity and found that DeepSeek sends data to the Chinese tech giant Baidu and the Chinese internet infrastructure firm Volces. And, as WIRED pointed out, the policy suggests that DeepSeek might also use user prompts to develop new models.

Why should an average user be concerned?

It’s easy to ignore the importance of data security. Reading through the fine print of privacy terms and conditions can be remarkably boring, which is precisely what makes skipping it so dangerous. DeepSeek is subject to government access under China’s cybersecurity laws, which mandate that companies provide access whenever the Chinese government demands it. For many AI models, we also don’t know how they are trained or how they operate, and that’s concerning, too, especially if your data could be misused or maliciously exploited.

Plus, you don’t want your identity stolen. You don’t want your bank account information in the wrong hands. Let’s say you give a lot of personal information to a chatbot or use a credit card to pay for it, and that data is stored by the company and later hacked or improperly shared. You could be in trouble. In a situation like that, at best, it’s really annoying to change your passwords; at worst, you’re out your life savings. A company like DeepSeek, or even Meta or OpenAI, might not actively steal your information to take your identity. Still, cyberattacks happen, and if a company is hosting your data, that data can be taken. Just yesterday, DeepSeek faced “large-scale malicious attacks,” which forced the company to temporarily limit new registrations.

“There is always the risk of cyberattacks,” Warmenhoven said. “As AI platforms become more sophisticated, they also become prime targets for hackers looking to exploit user data or the AI itself. With the rise of deepfakes and other AI-driven tools, the stakes are higher than ever.”

What can an average user do? Is this worse than what all the other tech giants do?

“It shouldn’t take a panic over Chinese AI to remind people that most companies in the business set the terms for how they use your private data,” John Scott-Railton, a senior researcher at the University of Toronto’s Citizen Lab, told WIRED. “And that when you use their services, you’re doing work for them, not the other way around.”

Safeguarding your data from DeepSeek is probably a good idea — but experts urge users not to stop there.

“To mitigate these risks, users should adopt a proactive approach to their cybersecurity,” Warmenhoven suggested. “This includes scrutinizing the terms and conditions of any platform they engage with, understanding where their data is stored and who has access to it.”

However, ultimately, it shouldn’t be up to an individual. As F. Mario Trujillo, a staff attorney for the Electronic Frontier Foundation, told Mashable, “When you type intimate thoughts and questions to a chatbot or search engine, that content should be protected and not be unnecessarily used or shared. The best way to do that is to enact strong data privacy laws that apply to all companies, whether it be Google, OpenAI, TikTok, or DeepSeek.”

Protecting your privacy shouldn’t be up to you alone. Stronger data privacy laws would help everyone, whether the concern is apps based in China or U.S. companies with similarly questionable data privacy practices, like Meta and OpenAI.