People keep telling me to watch The Pitt, and now that the show is tackling generative AI in healthcare, I must. As Mashable’s tech editor, I field a lot of questions about generative AI, and I also spend a lot of time talking to people who are extremely enthusiastic about artificial intelligence, and people who are extremely hostile to AI.

So, what does the latest episode of The Pitt Season 2 get right about AI in medicine, and what does it get wrong?

How AI factors into The Pitt episode “8:00 AM.”

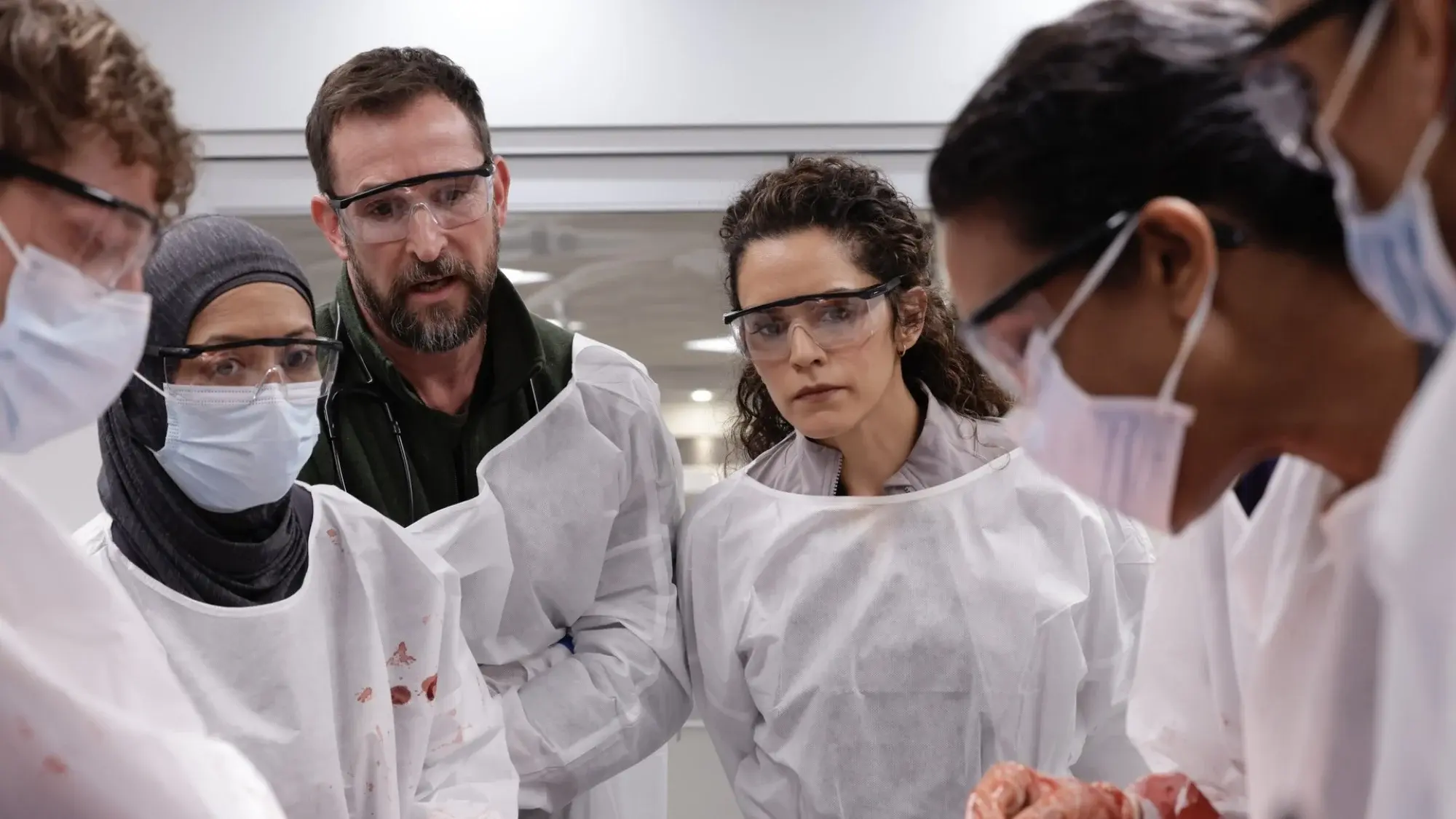

Credit: Warrick Page / HBO Max

AI wasn’t the entire focus of Season 2, Episode 2, “8:00 AM.” We also get to see a nun with gonorrhea in her eye, an unhoused man with a colony of maggots inside his moldy arm cast, and a clear view of an erection, which, of course, has lasted more than four hours.

However, one of the plotlines focuses on newcomer Dr. Baran Al-Hashimi’s (Sepideh Moafi) attempt to modernize the ER and integrate AI into patient care. She introduces an AI app that automatically listens to patient visits and summarizes the pertinent details in their charts.

When an excited student says, “Oh my god, do you know how much time this will save?” Dr. Al-Hashimi has an answer: With the new AI app, the ER doctors will spend 80 percent less time charting. Later in the episode, the good doctor tells Dr. Robby (Noah Wyle) that it will also allow physicians to spend 20 percent more time with patients.

So far, so good, but the app immediately makes a mistake. It documents the wrong medication in the patient’s chart, substituting a similar-sounding drug for the correct one.

This doesn’t diminish Dr. Al-Hashimi’s enthusiasm at all. “Generative AI is 98 percent accurate at present,” she says. “You must always carefully proofread and correct minor errors. It’s excellent but not perfect.”

AI transcription is really good (and it still makes mistakes).

Dr. Al-Hashimi states that generative AI is 98 percent accurate. But is this really true?

Fact-checking Dr. Al-Hashimi’s claim is actually tricky, because it’s not entirely clear what she means. If she’s referring purely to AI transcription, she’s closer to the truth. That’s a task that generative AI excels at.

Last year, a group of researchers conducted a systematic review of 29 studies that measured AI transcription accuracy specifically in healthcare settings. (You can check out the entire review, published in the journal BMC Medical Informatics and Decision Making.)

Some of those studies did find accuracy rates of 98 percent or higher, and some found significant time savings for doctors. However, those studies involved controlled, quiet environments. In multi-speaker environments with a lot of crosstalk and medical jargon, like you’d find in a crowded emergency room, accuracy rates were much lower, sometimes as low as 50 percent.

Still, rapid advancements in large language models are improving AI transcription all the time. So, we could be generous and say Dr. Al-Hashimi’s claim is close to the truth, for the latest LLMs, in certain settings.

Generative AI is definitely not 98 percent accurate.

Let’s take the latest version of ChatGPT as an example. When the GPT-5.2 model was released a few months ago, OpenAI published documentation on the model’s tendency to hallucinate and provide false information.

According to OpenAI, its GPT-5.2 Thinking model has an average hallucination rate of 10.9 percent. That’s really high, especially considering that OpenAI wants ChatGPT to help you with medical questions. (The company recently launched ChatGPT Health, a “dedicated experience in ChatGPT designed for health and wellness.”)

Now, when GPT-5.2 Thinking is given access to the internet, its hallucination rate drops to 5.8 percent. But would you trust a doctor who’s wrong 5.8 percent of the time, and who manages even that only when they can use the internet to check their work? And would you want your AI healthcare app to be connected to the internet at all?

Generative AI may one day be 98 percent accurate. But we’re not there yet.
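For readers who want to see the arithmetic, here is a rough back-of-the-envelope sketch in Python. It assumes that a hallucination rate can be read as a simple error rate, which is a simplification on my part and not necessarily how OpenAI defines the metric. The function names are made up for illustration. Under that assumption, the quoted figures work out to roughly 89 percent and 94 percent accuracy, both short of 98.

```python
# Back-of-the-envelope comparison of the figures quoted in this article.
# Assumption: a hallucination rate is treated as a plain error rate.
# That is a simplification for illustration, not OpenAI's definition.

CLAIMED_ACCURACY = 98.0  # percent, Dr. Al-Hashimi's line in the episode

# Hallucination rates (percent) as quoted above for GPT-5.2 Thinking.
HALLUCINATION_RATES = {
    "without internet access": 10.9,
    "with internet access": 5.8,
}


def implied_accuracy(error_rate_pct: float) -> float:
    """Accuracy implied by an error rate, both expressed in percent."""
    return round(100.0 - error_rate_pct, 1)


def times_claimed_error(error_rate_pct: float,
                        claimed_accuracy_pct: float = CLAIMED_ACCURACY) -> float:
    """How many times larger an error rate is than the one the claim implies."""
    claimed_error_pct = 100.0 - claimed_accuracy_pct
    return round(error_rate_pct / claimed_error_pct, 2)


if __name__ == "__main__":
    for setting, rate in HALLUCINATION_RATES.items():
        print(
            f"{setting}: about {implied_accuracy(rate)}% accurate, "
            f"{times_claimed_error(rate)}x the error rate a 98% claim implies"
        )
```

Put another way, a tool that really was 98 percent accurate would be wrong 2 percent of the time, so even the better of the two quoted rates is nearly three times that.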

Generative AI can’t replace doctors.

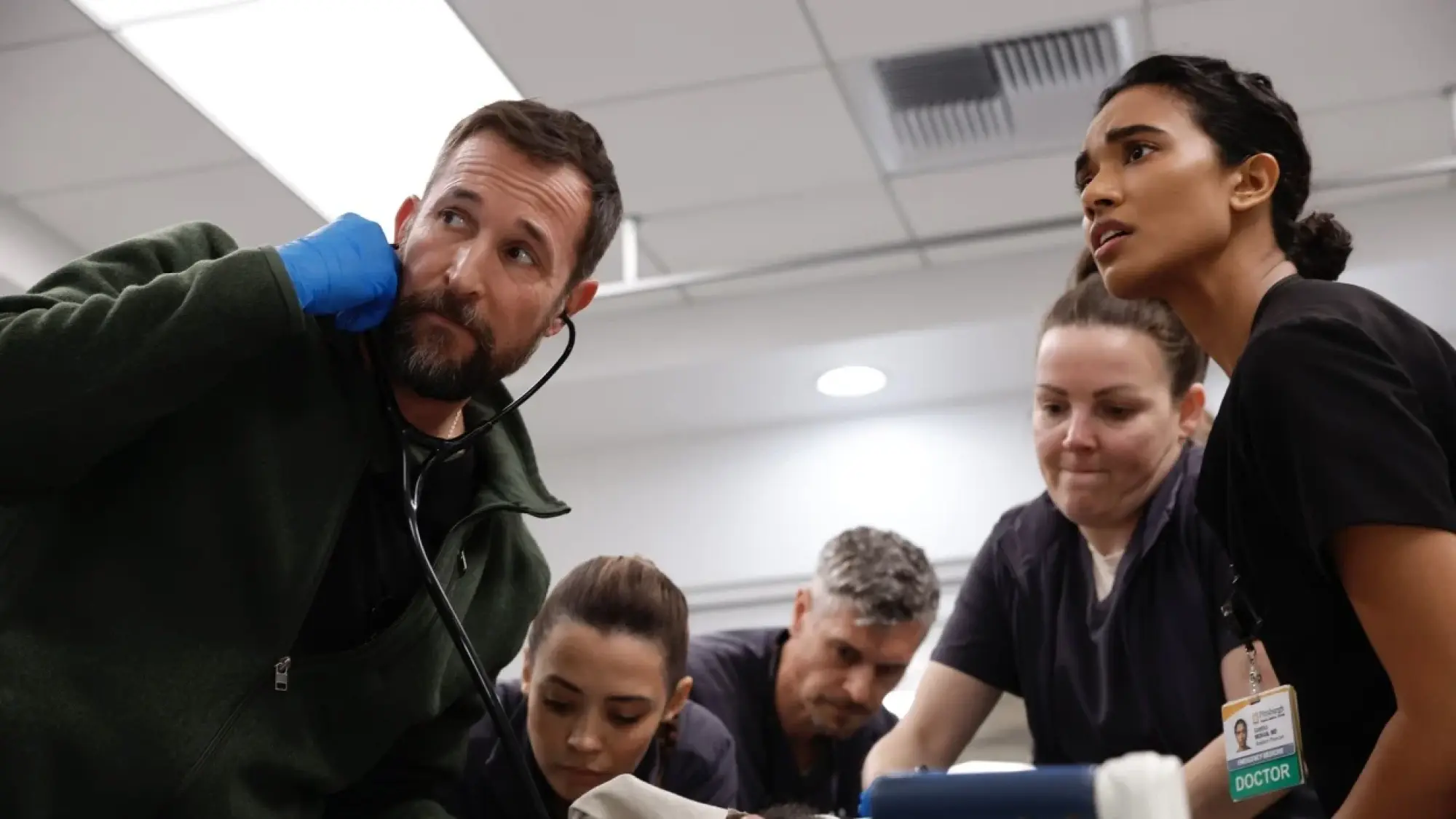

Credit: Warrick Page / HBO Max

Dr. Robby and other characters have a conversation about “gut” instincts in this episode. That’s something that generative AI can’t replicate, and one reason that many people don’t want to see AI tools replacing human workers entirely, whether in the arts or medicine.

The episode also spends a lot of time showing examples of empathy. The best doctors don’t just have an encyclopedic knowledge of their specialty. They don’t just have good gut instincts. They’re true healers, who know that holding a patient’s hand at the right moment can be just as important as the right diagnosis.

To be fair, most AI healthcare tools I’ve seen aren’t trying to replace doctors. Instead, they want to give healthcare workers more diagnostic tools and save them time. As Dr. Al-Hashimi says repeatedly in the episode, she wants to give doctors more time at their patients’ bedside.

AI can help save doctors time.

Radiology, or medical imaging, is one of the most promising applications for generative AI in healthcare settings. When radiologists at Northwestern University implemented a custom generative AI tool to help analyze X-rays and CT scans, their productivity increased by up to 40 percent, without compromising accuracy.

I think even a lot of AI skeptics would agree that’s a positive result, for patients and doctors.

The Pitt does seem to be setting up Dr. Al-Hashimi as something of a villain (or, at least, a foil for Dr. Robby), but good medicine and generative AI aren’t necessarily in conflict. Like any tool, generative AI can be extraordinarily helpful or extraordinarily dangerous.

Disclosure: Ziff Davis, Mashable’s parent company, in April 2025 filed a lawsuit against OpenAI, alleging it infringed Ziff Davis copyrights in training and operating its AI systems.